Soundscapes and Wellbeing Talk

Interdisciplinary Perspectives on Soundscapes and Wellbeing workshop

This post is a transcription of a flash talk I gave a few weeks ago at the Interdisciplinary Perspectives on Soundscapes and Wellbeing workshop. The event was hosted by the Environmental Psychology Research Group, University of Surrey, and part-funded by The UK Acoustics Network Plus (UKAN+), AI4S.surrey.ac.uk, and the School of Psychology, University of Surrey. I was fortunate to have the opportunity to spend time with a wonderful group of people talking shop. The breadth of approaches being brought together was motivating, with work from city planning, urban design, neuroscience, AI, citizen science, law, health and wellbeing, art, biodiversity, and more! The event was a testament to the creativity within the field, and something I’m looking forward to giving more time to this year.

Hi, I'm Thomas Deacon, a design researcher in the AI for Sound project, working with Mark Plumbley.

My role is to imagine new possibilities and question our ideas to ensure they align with people's values and consider the wider impact of technology.

Our research focuses on the applications of Machine Listening for enhancing wellbeing. This involves training machines or computer systems to understand and interpret human auditory information.

However, Machine Listening relies on capturing and representing expected 'modes of listening' through artifacts like datasets and statistical models, and the output of such systems may not be easily comprehensible to humans, given the mismatch between the subjective and the objective. To address this, we recognize the need for alternative approaches that encompass other modes of listening, aiming to make AI less normative.

We tackle this problem through participatory design and the use of creative methods.

Today I'll highlight two works around personalised soundscapes that aim to support wellbeing.

We often think of a soundscape as intrinsic to its location. Still, personalised soundscapes delivered via technology present an opportunity to alter our sonic environments without physically going anywhere else. This leads us to two questions.

How do we capture data that can support meaningful personalisation for wellbeing, and what are our ethical needs in this sort of future technology?

In a study exploring how we can gather and understand indoor soundscape data using AudioMoths, we needed to involve people in the process of making annotations and reflecting on their sound environment. To keep this engaging, I designed a series of activities to provide a form of deep listening practice. It was technology-based: we used a mobile app to prompt participants at different times of day to take part in listening activities.

Looking at one activity, in “Find the Hidden Sounds” we asked:

Hearing gets to places where sight cannot. Ears see through walls and around corners. When something is hidden, sound will reveal its location and meaning. Make a list of all the sounds you can think of that come from hidden places, sounds that are made by objects you have never seen.

From this study, we are beginning to understand people’s conceptions of place and sound, and how they link to memories. What we are starting to see is that engaging with everyday sounds can be fun and thought-provoking, and for one lady it was a form of pain distraction.

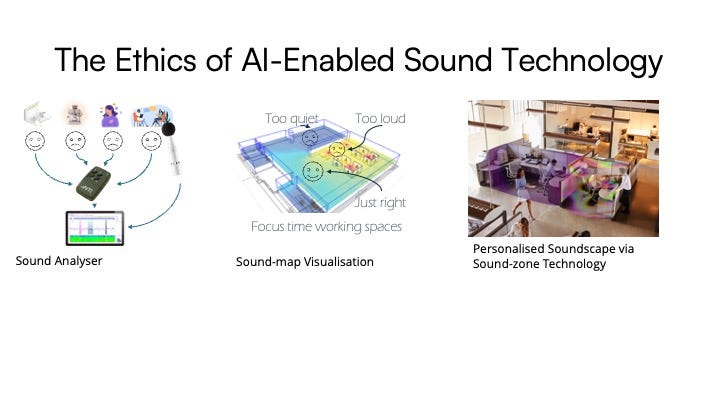

Now, imagine a world where AI soundscape technology is highly efficient and affordable. This is made possible by improvements accumulating at scale over time, to the point where the technology can be easily installed in your workplace.

This scenario presents ethical challenges related to the widespread adoption of advanced sound monitoring systems. To explore this, we conducted focus groups using thought experiments involving utopias and dystopias, to help us understand people's preferences regarding workplace sound technologies like soundscape generators and sound-map visualisations.

The feedback we got challenges some of the underlying logics behind the systems in the thought experiments, prompting us to develop new technology concepts and methods to engage stakeholders.

To wrap up, instead of treating human subjectivities as separate from the technological tools they interact with, we should consider AI that is centred around people’s needs rather than solely focusing on machine-capable outcomes. As we continue exploring these avenues, our objective isn’t just creating intelligent systems but using them to create empathetic, restorative, and engaging spaces.

Sorry about the export quality on the slide; next time it will be better. Ta ta!